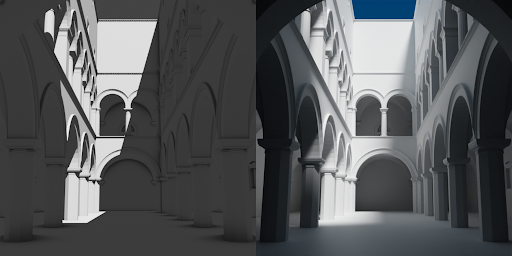

Fully photo-realistic visuals are not easy to achieve, and sometimes they are not even necessary. As it turns out, simply adding some degree of realism to an overall stylized image is enough to significantly improve its appearance. For example, complex lighting can do - and actually does - wonders even for the simplest scenes.

Image: Same scene, same lighting setup, completely different results. The only difference is the algorithm for computing lighting: simplified on the left and realistic on the right. The model in the scene is Sponza Atrium by Marko Dabrovic

The same is true for animation: even a completely over-the-top stunt looks better when it seems like something that could possibly happen in real life. So how can we improve the realism of our work?

Traditionally, this has been done by studying references and carefully copying real-life examples. But technology continues to advance. Today we know that making something realistic means making it conform to the laws of physics. And with the knowledge we now possess, we are able to apply said laws to our creations almost directly.

Physics and CG

Nowadays, physics is used everywhere in computer graphics. One of the best examples is so-called “physically based rendering”: an advanced rendering method that takes into account the flow of light as well as the reflectivity and other characteristics of the surfaces in the image. This method, while somewhat more computationally intensive than its predecessors, is able to produce much more lifelike and aesthetically pleasing images than simpler, more traditional approaches.

Scene lighting also benefits greatly from physically accurate approaches, as we demonstrated at the beginning of the article. Even post-processing effects such as lens flares or depth of field are nothing more than an attempt to imitate real-world phenomena.

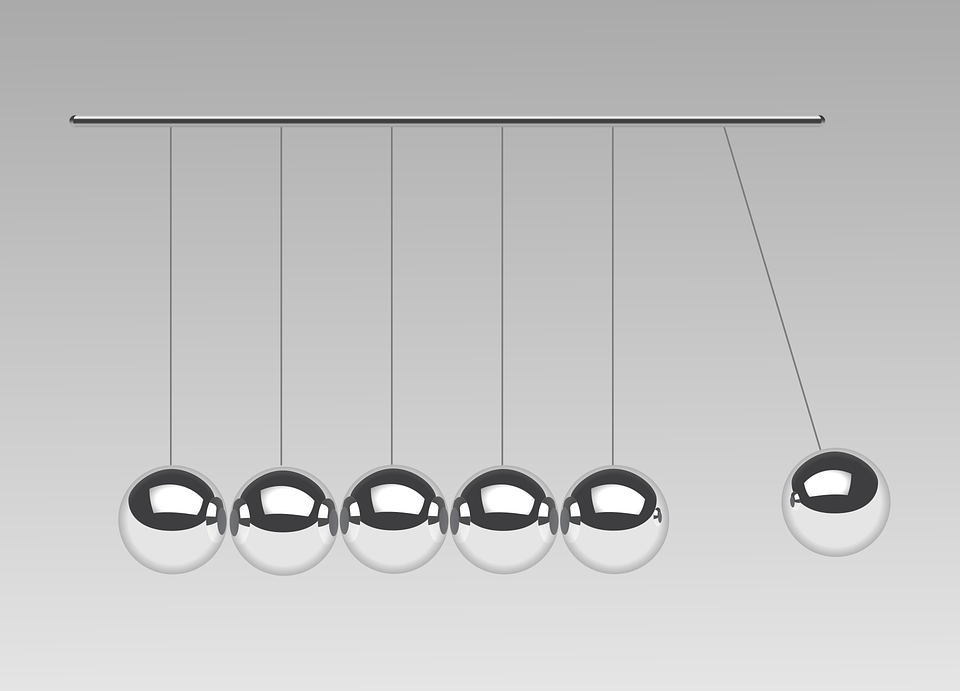

In addition to image rendering, physics is widely used to make things move. The simplest - and perhaps oldest - example of this is basic rigid body simulation.

Here we have basic geometric shapes to which some basic laws of physics - primarily gravity and friction - are applied. Scenes like these were first introduced in pre-rendered works like commercials, movies and TV shows. However, this kind of physical simulation does not require overly complex calculations, so as technology advanced, such simulations started to appear in real-time applications as well - and they are still widely used.
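To give a sense of how little is needed, here is a minimal sketch of such a simulation in Python: a single box that falls under gravity, lands on a flat floor and slides to a stop because of friction. Everything in it is an assumption made for illustration (the `Box` class, the `step` and `simulate` functions, the friction coefficient of 0.5, the fixed time step); it is not taken from any particular engine, and a real rigid body solver would also handle rotation and collisions between bodies.

```python
import math
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2, pulling along -y


@dataclass
class Box:
    x: float   # horizontal position (m)
    y: float   # height of the bottom face above the floor (m)
    vx: float  # horizontal velocity (m/s)
    vy: float  # vertical velocity (m/s)


def step(box: Box, dt: float, friction: float = 0.5) -> None:
    """Advance the box by one time step using semi-implicit Euler."""
    # Gravity updates the velocity first, then the velocity moves the box.
    box.vy -= GRAVITY * dt
    box.x += box.vx * dt
    box.y += box.vy * dt

    if box.y <= 0.0:
        # Contact with the floor: no bounce, just clamp to the surface.
        box.y = 0.0
        box.vy = 0.0
        # Coulomb friction slows the slide by mu * g, without reversing it.
        slowdown = friction * GRAVITY * dt
        if abs(box.vx) <= slowdown:
            box.vx = 0.0
        else:
            box.vx -= math.copysign(slowdown, box.vx)


def simulate(box: Box, seconds: float, dt: float = 1 / 240) -> Box:
    """Run the simulation for the given duration and return the box."""
    for _ in range(round(seconds / dt)):
        step(box, dt)
    return box
```

Starting the box one metre above the floor with a horizontal speed of 2 m/s, `simulate(Box(0.0, 1.0, 2.0, 0.0), 2.0)` leaves it at rest on the floor roughly 1.3 m from where it started.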

A similar, but more advanced example would be fluid dynamics. Here, a large number of physical bodies are used to approximate the movement of a body of water:

In contrast to the previous example, scenes like these do indeed require quite a lot of processing power. For a long time this limited their applicability to pre-rendered media, although today simplified versions are starting to make their way into real-time applications.

But while things like these can look very impressive, the core of any narrative work is its characters. Animating characters is not an easy task, especially when the animation has to look realistic. So how can we apply physics to character animation?

Physics and Characters

Historically, the first attempt to combine character animation and physics was the so-called ragdoll physics. The idea here is similar to the simple rigid body physics we’ve already seen: several geometric shapes are linked together to roughly approximate the character’s body. Simulation is then run for these bodies to calculate how the character would move in free fall. The resulting motion is then transferred to the character.
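The idea of linked bodies in free fall can be sketched in a few lines. The example below is a simplified stand-in rather than a production ragdoll: instead of full rigid bodies with joint limits, it uses point masses joined by fixed-length links, moved with Verlet integration and corrected by distance constraints. The function name `simulate_chain` and all of its parameters are our own assumptions for this illustration.

```python
import math

GRAVITY = -9.81  # m/s^2 along the y axis


def simulate_chain(points, pinned, rest_lengths, steps, dt=1 / 60, iterations=10):
    """Drop a chain of 2D points whose neighbours are joined by rigid links.

    points       -- list of (x, y) start positions
    pinned       -- set of point indices that never move
    rest_lengths -- rest_lengths[i] is the link length between i and i + 1
    """
    pos = [list(p) for p in points]
    prev = [list(p) for p in points]  # equal to pos: the chain starts at rest

    for _ in range(steps):
        # Verlet integration: velocity is implied by (pos - prev).
        for i, p in enumerate(pos):
            if i in pinned:
                continue
            vx, vy = p[0] - prev[i][0], p[1] - prev[i][1]
            prev[i] = [p[0], p[1]]
            p[0] += vx
            p[1] += vy + GRAVITY * dt * dt

        # Constraint relaxation: pull each pair back to its link length.
        for _ in range(iterations):
            for i, rest in enumerate(rest_lengths):
                a, b = pos[i], pos[i + 1]
                dx, dy = b[0] - a[0], b[1] - a[1]
                dist = math.hypot(dx, dy)
                if dist == 0.0:
                    continue
                a_free, b_free = i not in pinned, (i + 1) not in pinned
                if not (a_free or b_free):
                    continue
                # Split the correction between whichever ends can move.
                wa = 0.5 if (a_free and b_free) else (1.0 if a_free else 0.0)
                wb = 0.5 if (a_free and b_free) else (1.0 if b_free else 0.0)
                corr = (dist - rest) / dist
                a[0] += dx * corr * wa
                a[1] += dy * corr * wa
                b[0] -= dx * corr * wb
                b[1] -= dy * corr * wb
    return pos
```

Calling it with three points in a horizontal line and the first one pinned, for example `simulate_chain([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)], {0}, [1.0, 1.0], steps=30)`, makes the free end swing downward like a limp arm while the links keep their length. Transferring such positions onto a character's skeleton is the part a real ragdoll system adds on top.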

Just like regular physical simulations, ragdoll can be used in both pre-rendered and real-time sequences with equal ease.

The limitation of this approach is obvious: it is not supposed to replace hand-made animation, only to supplement it - and sometimes to enhance it, but in a limited way. The purpose of ragdoll is to automate some of the character motions, namely those which do not require a precise result.

This is the main downside of simple simulations like these: they can’t really be controlled. There is no real way to tell how a scene will turn out until it is executed. This is fine - and might even be preferable - for things like falling, but not so much for more complex actions.

Still, when set up properly, ragdoll can provide some impressive results, and at the time it was considered a major achievement in combining physics with animation. Even today, while not as omnipresent as before, it is still widely used. But what’s more important: it was this technique that led to another, more advanced solution.

Physics Tools for Character Animation

The next logical step was to integrate physical simulation directly into character animation.

Traditionally, the classical animation approach did not include any physics tools. The way characters moved was decided solely by people who animated them. And even though animators sometimes relied heavily on real-life references, their main goal was always artistic intention.

Furthermore, the traditional animation pipeline did not really allow for integrating any kind of physical simulation. And this did not really change even when the pipeline was adapted for 3D animation. To this day, animation is expected to be done manually. Only rarely do some highly skilled animators use something like a center of mass controller.

Recently, however, this has started to change. Increased levels of visual fidelity have led to increased demand for realism in animation, which in turn has called for all sorts of experiments in integrating physics with character animation. Today we have several concurrent approaches to this task:

The first is exemplified by solutions like Ziva VFX (https://zivadynamics.com/ziva-vfx):

Image: R&D Dino Breakdown by GhostVFX (https://ghostvfx.com/)

This is a plugin for Maya (a popular 3D graphics solution) designed to simulate soft tissues such as skin, fat and muscles. It works by giving the 3D artist the means to define what these tissues are and how they are connected to the bones and to each other - and then the software calculates how they would move, deform and interact when the bones move. In a way, this is a ‘reverse’ solution: muscles do not move the bones like they do in real life, but are themselves moved along with the bones. Even though this kind of simulation does not affect the animation itself, applying it to any animation greatly enhances the impression it has on the viewer.

But impressive as it is, this effect still relies on already existing animation. So logically the next step should be using physics for generating poses and motions themselves.

A different approach is to use physics to calculate actual character poses. This is the approach we use in our own software Cascadeur. The general idea here is similar to ragdoll physics: the character is represented as a number of interconnected rigid bodies which are used to determine how the character’s body would react in any given pose. This data, however, is not used to simply make the character fall: instead, it is mixed with the already existing character animation. Doing this adds a sense of weight and inertia to the animation, and makes it look much more lifelike.
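As a rough illustration of what "mixing" simulated data with existing animation can mean, the sketch below blends a keyframed pose with a simulated one, joint by joint. This is not how Cascadeur works internally; the function `blend_pose`, the representation of a pose as a dictionary of joint angles in degrees, and the `physics_weight` parameter are all assumptions made for the example. Real rigs would typically blend 3D rotations (for instance as quaternions) rather than single angles.

```python
def blend_pose(keyframed, simulated, physics_weight):
    """Mix a hand-made pose with a simulated one.

    keyframed      -- dict of joint name -> angle in degrees (the animator's pose)
    simulated      -- dict of joint name -> angle in degrees (the physics result)
    physics_weight -- 0.0 keeps the animator's pose, 1.0 takes the simulation
    """
    if not 0.0 <= physics_weight <= 1.0:
        raise ValueError("physics_weight must be between 0 and 1")

    blended = {}
    for joint, angle in keyframed.items():
        # Joints the simulation did not touch keep the animator's value.
        target = simulated.get(joint, angle)
        blended[joint] = (1.0 - physics_weight) * angle + physics_weight * target
    return blended
```

With a weight of 0.25, `blend_pose({"elbow": 10.0, "knee": 40.0}, {"elbow": 30.0}, 0.25)` moves the elbow a quarter of the way toward the simulated value and leaves the knee as it was. The weight is what keeps the animator in control: the simulation only nudges the pose as far as they allow.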

Although a method like this does not generate any animation by itself, it greatly simplifies the task of achieving physically accurate motions - and what’s just as important, all while retaining full control over the end result. In a way, it is the best of both worlds.

Another way it can be done is by using AI to actually move the character. This approach also uses a simplified representation of a character that resembles a ragdoll setup, but it also relies on a reference animation to ‘know’ how the character is supposed to move and otherwise behave. The system uses the data it acquired from the reference to try and make the physical model perform a specific task such as moving to a certain location, grabbing or hitting a certain object and so on. This process is almost entirely automated and has virtually no user input aside from providing references and setting up the task.

Image: DeepMimic project by Xue Bin Peng, Pieter Abbeel, Sergey Levine and Michiel van de Panne (https://xbpeng.github.io/projects/DeepMimic/index.html).

Moreover, the end result can be easily customized so that there are virtually infinite variations, making this approach quite useful for many tasks. For example, it can be used to make alterations to pre-existing motions, or to produce many variations of the same motion.

However, there are downsides to this approach as well. A significant amount of time is required to train a character to perform an action, the results can be unstable, and errors in the training algorithm are not always easy to fix. Furthermore, the above-mentioned ‘no user input’ means little to no control over the end result. In the future, this approach might very well grow into one of the most interesting techniques for character animation, but currently it is still more a proof of concept than a tool fit for practical use.

So as we can see, there are many ways to use physics in the animation pipeline. And each one of these solutions can - if used correctly - produce highly impressive results. All of them are designed for different purposes, and bizarrely enough this is exactly what they all have in common. Currently, there is no single, all-in-one solution for producing physically correct animation: only several smaller-scale solutions intended for specific cases. Physics-based animation is not yet a mature concept, but rather a collection of techniques. And that is understandable: this field is still very new; even the idea of using physical laws to enhance or generate animation is not yet something that is widely accepted.

But exactly because it is so novel, physics-based animation has been showing a huge degree of progress in recent years. And it shows no signs of slowing down. In time, it may lead to the appearance of more generalized animation solutions - but what we can be certain about is that it will surely bring us some new and exciting physics tools.

Our team in particular has plans for adding a lot of new features in future versions of Cascadeur.

What the Future Holds

First of all, we intend to continue refining already existing features of the software. This includes significant improvements to the Secondary Motion tool: its future versions will be able to emphasize the character’s motions even better than the tool currently does. We also plan to add several new features, one of which will be Retiming. This will be a physics-based tool capable of changing the length of a given motion - or of some of its parts - making the resulting animation more physically accurate as well as more expressive and accentuated.

Image: Retiming at work in Cascadeur

The AutoPhysics tool will receive some updates as well. The program will be able to smooth changes in the character’s angular momentum, making actions look more natural. Another addition will be an option to preserve global positions and rotations for the selected body parts, making it possible to animate physically accurate punches and kicks in mid-air.

Finally, the way the physics simulations are applied to the existing animation will be revised as well: it will become a much more seamless process, without distortions that do occasionally happen in the current versions.

All this will significantly advance the applicability of the already versatile instrument.

Conclusion

And that’s it: this is the current state of physics in animation. The story does not end here, though. As technology continues to progress at a rapid pace, we can be certain that the future holds even more impressive achievements in this field. We hope you’ve found our little overview insightful and interesting. If you have something to add, or a thought to share, please don’t hesitate to leave a comment in our forum.