App2Top Interview: Eugene Dyabin talks about the future of Cascadeur and neural networks

In this recent interview with the game development magazine App2Top.com, Cascadeur creator Eugene Dyabin talks about his plans, the challenges that Nekki's animation tool currently faces, and, most importantly, its integration with neural networks. We are publishing part of the original interview on our blog.

Alexander Semenov, Editor-in-Chief of App2Top - Eugene, hello! Look, I've wanted to talk with the Cascadeur team about graphical neural networks since December. By the way, how do you feel about them in general?

Eugene Dyabin, founder and chief producer of Cascadeur - We regard them with great interest and even use them in our own development. Speaking broadly, I do not share the panic about a sudden technological revolution that will wipe out many professions. The advent of cameras did not reduce the number of artists, and the arrival of cameras with automatic settings in every phone has not reduced the number of professional photographers and camera operators, although it has changed their work.

In addition, these new neural networks have many problems and limitations. Once you try to put them into practice, your first impression cools considerably. We'll see how fast progress turns out to be.

In December, Cascadeur had its official worldwide release after a year in beta. What challenges did you face as a service after that?

Eugene - The release brought a large influx of users, which significantly increased the load on our support team. There were a lot of questions, but the size of the support team has stayed the same for now, and it's not easy to scale: support staff need to be well versed in the program and its technical details.

That's why we're working on a comprehensive FAQ, both to speed up support overall and to prioritize support for Pro users: in the future, when they buy a license, they will immediately receive a link to a closed channel with direct access to the development team. In general, we are optimizing the support process right now.

It was also the first time we encountered companies that need to buy, for example, 40 licenses at once, so we had to finish this feature urgently. It also turned out that 20% of our users are students or people working in education, so now we also need to speed up the process of issuing educational licenses.

You're probably collecting feedback. Based on it, what do users usually find missing in Cascadeur today?

Eugene - Users are missing thousands of little things, and it's impossible to do everything at once. So we have to prioritize the features we think are most requested or most important. There are frequent but complex requests, like facial animation or blendshapes, that need to be done sooner or later, but so far we just don't have enough people.

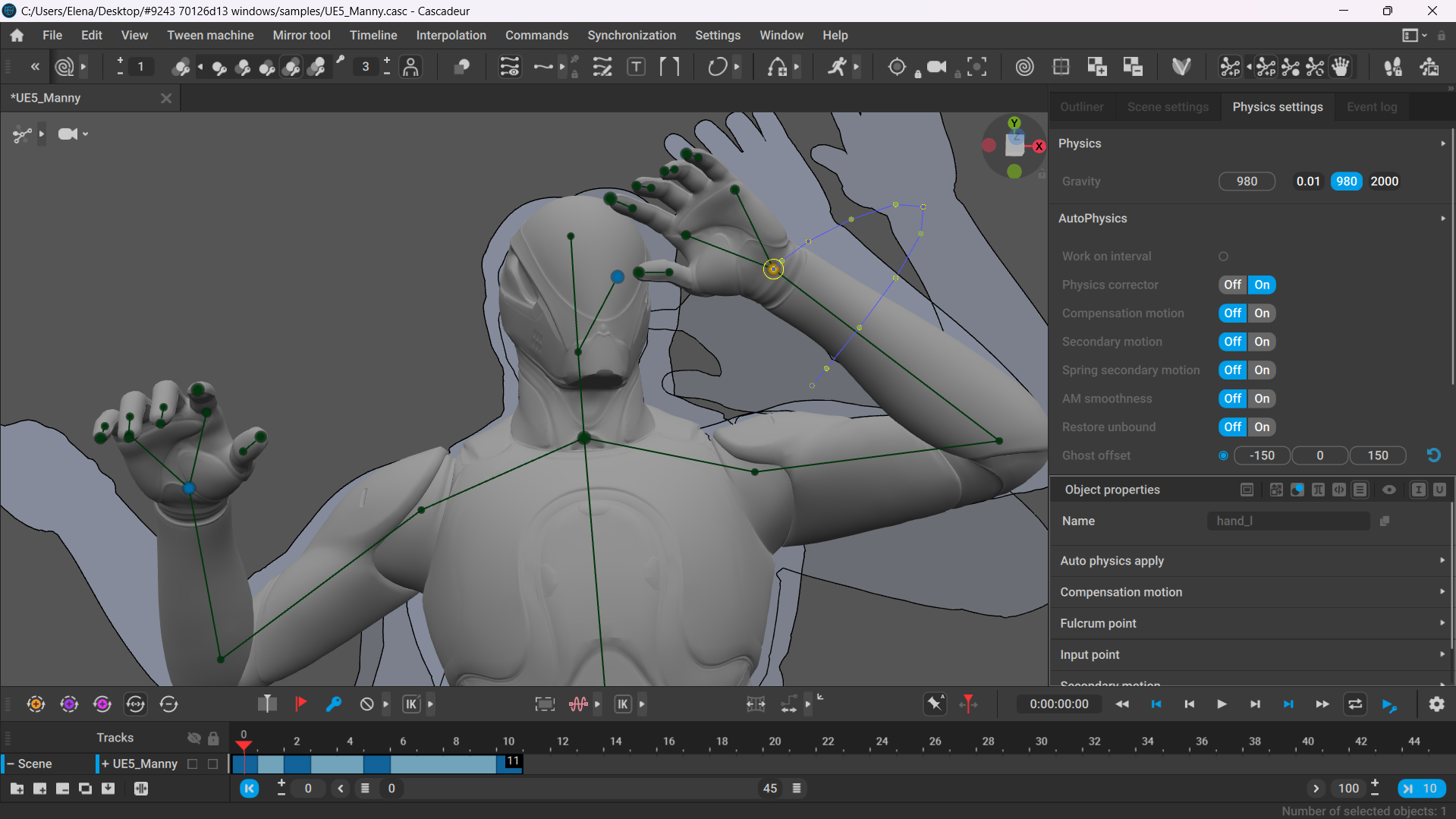

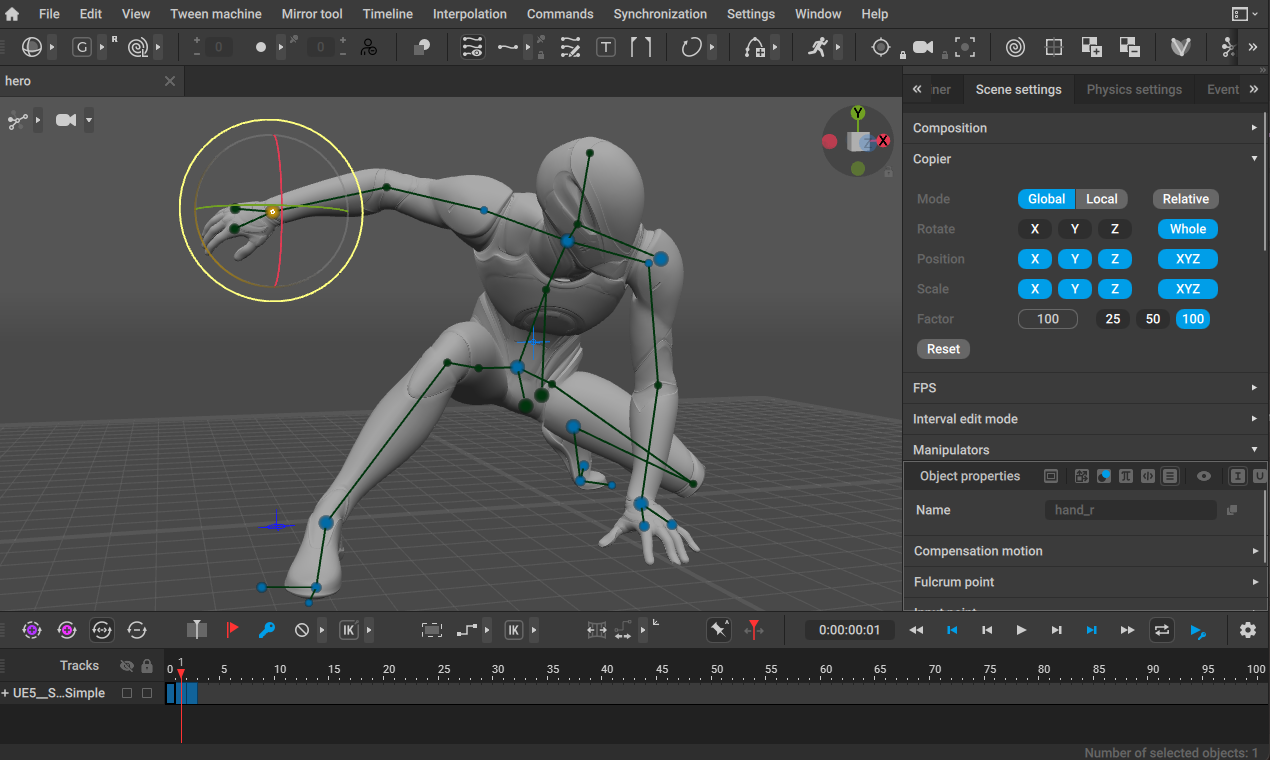

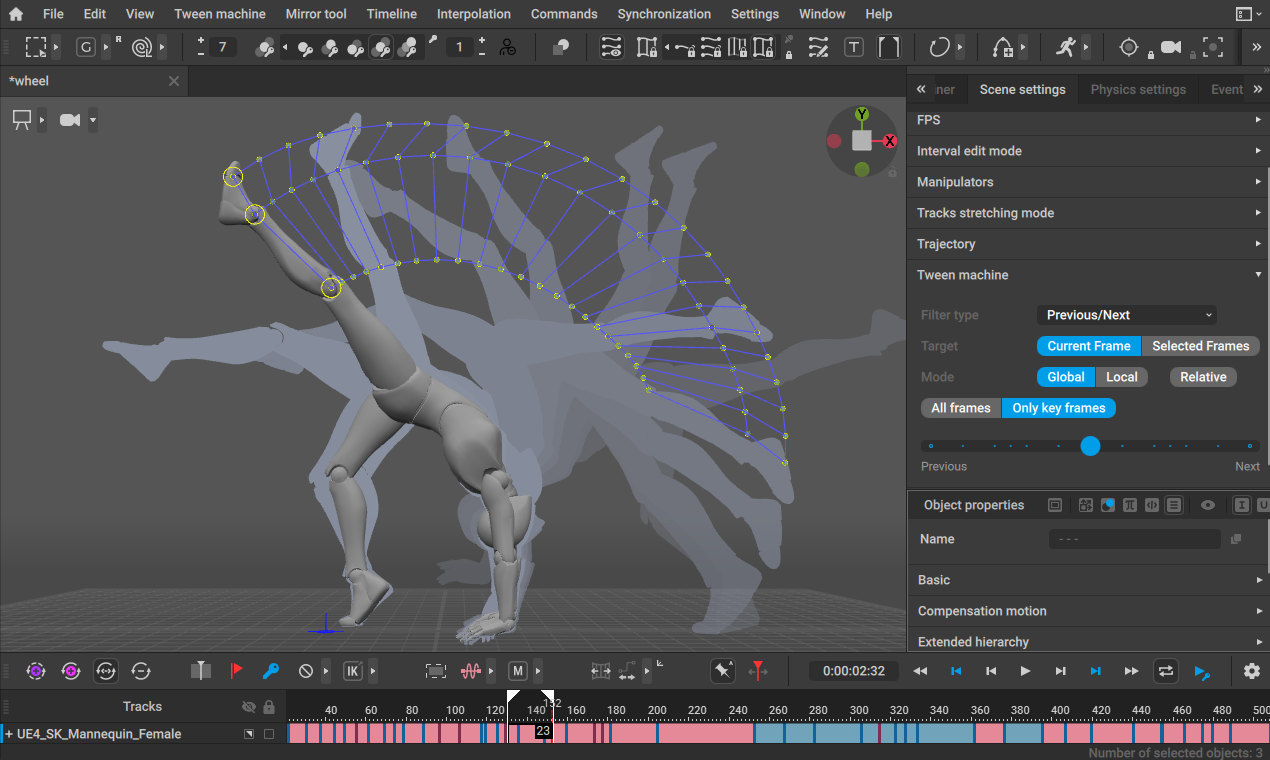

As for our main theme, physics, many users expect ragdolls, collisions and interaction with the environment. Our AI AutoPhysics specializes in correcting dynamic balance in movements, correcting trajectory and rotation in jumps, and adding secondary motion, thereby greatly improving the quality of animation and speeding up work on it. So far, though, it isn't possible to push off walls, climb ledges, or interact with other characters. But we are actively working on that as well.

Some users have complained that posing a character's fingers is awkward because you have to rotate each finger segment individually. But with the 2023.1 update, we'll be adding automatic finger positioning: a smart rig that makes it very easy to control the fingers using a few controllers.

When I saw what the Stable Diffusion + ControlNet + Blender bundle was capable of, I immediately thought of Cascadeur. Unlike Blender, it is focused on posing, and I am sure it has a lower barrier to entry than Blender (and it is definitely more convenient than ControlNet's basic functionality). So the question is: should we expect SD support in Cascadeur?

Eugene - We immediately thought of it too! And we weren't the only ones. However, only we have the most convenient tool for controlling a figure's pose, one suited to beginners and amateurs. We just need to add neural rendering based on Stable Diffusion, which will turn a 3D model into a finished 2D image in any style. We're already working on that.

We've always wanted to reach a wider audience, but the problem with Cascadeur is that it doesn't produce a finished end product; it's just one link in the production chain. Neural rendering can remove that barrier for a broad audience, especially in the mobile version of Cascadeur. You download the mobile app, set a relatively natural pose with AutoPosing, choose the camera angle, upload an image of a character, and get that character in the pose you want from the angle you want. This gives much more creative control over the outcome than a text description can. So far we're only talking about images, but one day we'll get to video, and that's when Cascadeur's advantages will really come into play.

The first neural networks capable of making videos are already appearing. In two or three years we will most likely see the first full-fledged short films. But will they be built on the principle of text-to-animation, or of text+animation-to-animation, where an animated mockup also acts as a prompt?

Eugene - So far I am skeptical that neural networks will be able to generate high-quality 3D animation in the near future without relying on physics simulation. We see text-to-animation as a first draft that we can clean up, correcting poses and physics, to get high-quality animation that the user can then edit with our tools. I can't imagine how you could get the result you need from text alone, without additional control, except in the most trivial cases.

If you add video neural rendering to this, which will become possible sooner or later, the tool becomes quite magical. You describe the idea in your own words, get a reasonably realistic version, edit it, and end up with a finished video featuring the right character in any style. But so far this is all a concept, and implementation is still a long way off.

Cascadeur itself was built on neural networks. In other words, you have been working in this area for a long time. But have you used them only within your own service? Or have you experimented with them elsewhere?

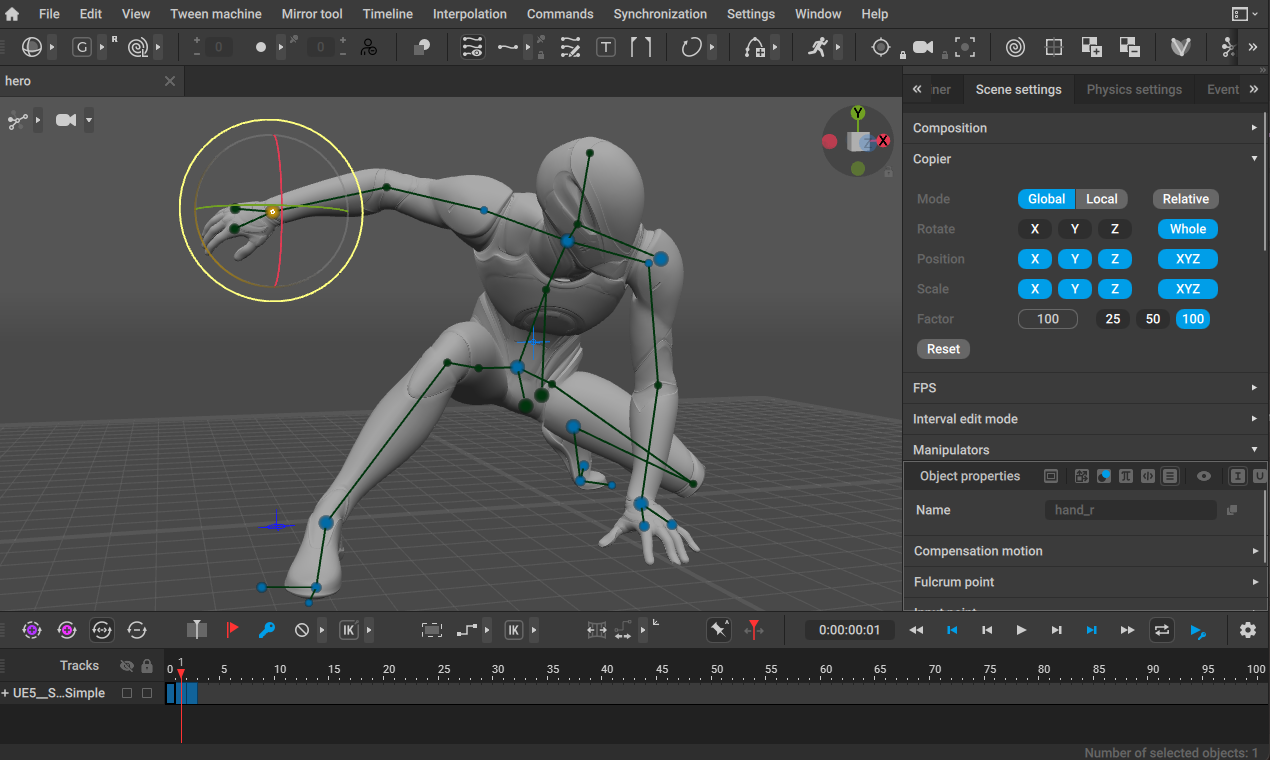

Eugene - First of all, I want to note that so far there is more physics in Cascadeur than neural networks, and this is the main difference from most of the animation-generation solutions we know of. We started using neural networks a few years ago and had the greatest success with the AutoPosing tool, which helps you create a natural pose with as few actions as possible.

If we talk about our company Nekki as a whole, neural networks have been used in other projects for various tasks. One example is the bots in Shadow Fight 4: Arena. They were trained on recordings of real players' battles and can control different characters using each character's special techniques and tactics.

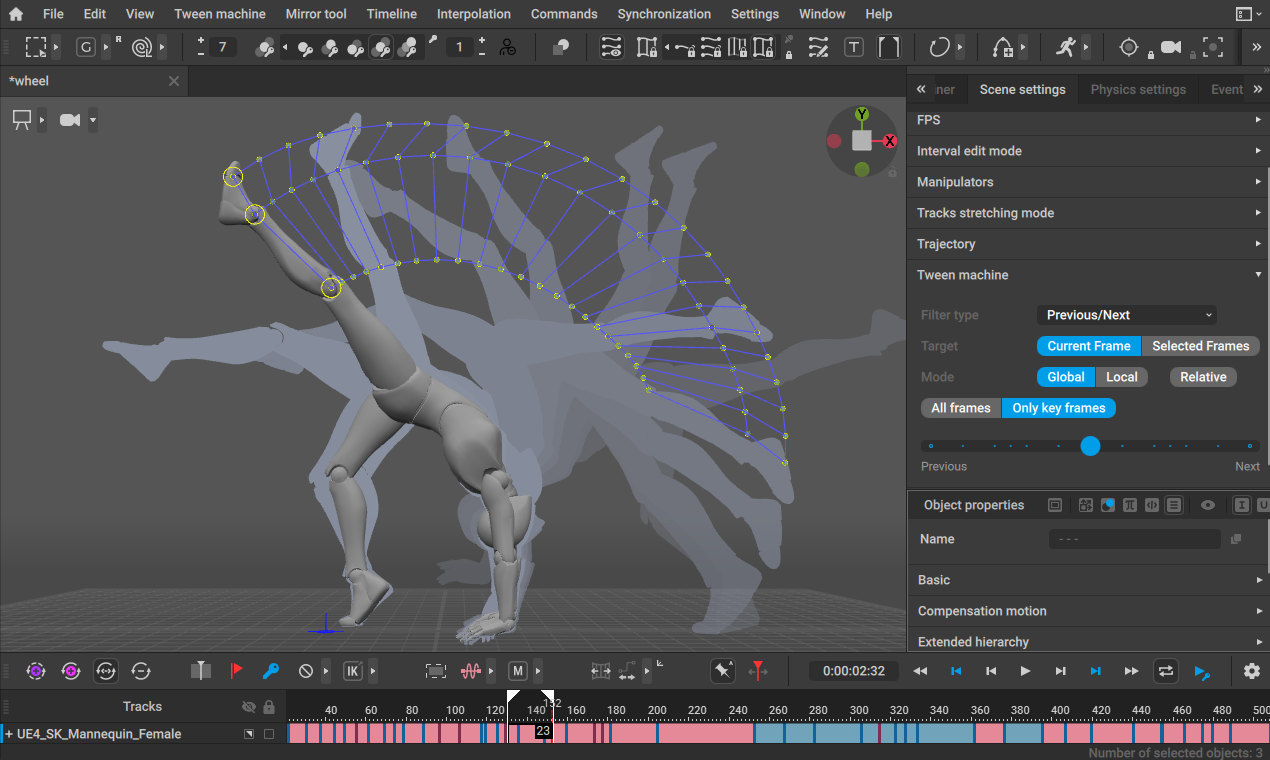

Many animations in Cascadeur are made like this: a video clip is loaded into the program as a reference, and the animator reproduces its key frames. How realistic is it for your service to implement a model that can make a draft animation from video alone? Are you working in this direction?

Eugene - Yes, we are working on it; it's something like video mocap. Cascadeur 2023.1 will already include the first alpha version of this feature. It can't yet achieve perfect quality, but as a reference animation draft that contains at least the key poses and timings, it can save a lot of time. We will keep developing and optimizing this feature. It runs slowly on the client computer, so in the long run we need to move it to the server; then we will be less limited by the user's hardware.

Back to business: people are saying that investors want to invest in AI like crazy. Have you experienced that? What do these investors expect? And do they understand that they are essentially competing with Microsoft, Google and Adobe in this space?

Eugene - I don't think there is any competition with such giants here. Large companies invest in the technology itself, while small companies and startups try to apply it in different areas. Even we don't develop our neural rendering or video mocap from scratch; we use available libraries and models that we configure and retrain for our tasks. My impression is that investment in such projects will now increase sharply. Cascadeur's development is fully funded by Nekki, but we are also open to proposals.

By the way, did you expect such attention to neural networks that they received after the popularization of MJ, SD and ChatGPT?

Eugene - A few years ago we already understood that the future lies in neural networks and their integration into various tools, so we began hiring data scientists and doing research and development on our tools to be ready for the coming revolution. But the successes of Midjourney and ChatGPT still surprised us and gave us new hopes and ideas, such as neural rendering, text-to-animation and others.

Are you ready to make a prediction? What can we expect from AI technologies, including your own, at least through the end of the year?

Eugene - I think the world and the market will not change as quickly as many people fear, but, most importantly, investment will shift, reflecting belief in a certain future. In the coming year, I expect to see the emergence of intelligent search and a change in the approach to tutorials: soon people will be able to receive personalized help and instructions for their tasks. Generative networks will help people prototype and try out different ideas faster, but in most cases they will not be able to produce the final result.

As far as we're concerned, we think of AI as an assistant that speeds up the work of an animator who knows what he wants. Motion detection from reference, AutoPosing, AutoPhysics, neural rendering: all of these are primarily designed to shorten the time between idea and result, but at every stage the animator has full control and can make any changes. I think that by the end of the year we will already be able to introduce new AI features.

And two technical questions. When I looked into the limitations of the free version, I found that export is limited to "300 frames and 120 joints". For those who are not familiar with animation, can you explain how much that is?

Eugene - The idea behind the restrictions is to let amateurs and indie developers use Cascadeur for free without sacrificing important features. 300 frames at 30 frames per second is 10 seconds. That's more than enough for game animations, but not enough for long cutscenes. Likewise, 120 joints is enough for almost any character, but not enough for multiple characters or for very elaborate ones.
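The frame-limit arithmetic above is simple, but a quick sketch makes the trade-off concrete. This is a minimal illustration only, assuming the free-version caps apply per export exactly as quoted (300 frames, 120 joints) and count frames regardless of frame rate. The helper names and the 65-joint example character are hypothetical and not part of Cascadeur.

```python
# Free-version export caps as quoted in the interview (assumed to apply per export).
MAX_FRAMES = 300
MAX_JOINTS = 120


def clip_duration_seconds(frames: int, fps: float = 30.0) -> float:
    """Clip length in seconds: frame count divided by frame rate."""
    return frames / fps


def fits_free_limits(frames: int, joints: int) -> bool:
    """Hypothetical helper: True if a scene stays within both quoted caps."""
    return frames <= MAX_FRAMES and joints <= MAX_JOINTS


print(clip_duration_seconds(MAX_FRAMES))        # 10.0 seconds at 30 fps
print(clip_duration_seconds(MAX_FRAMES, 60.0))  # 5.0 seconds at 60 fps
print(fits_free_limits(240, 65))                # True: one hypothetical 65-joint character
print(fits_free_limits(240, 2 * 65))            # False: two such characters need 130 joints
```

Under the same assumption, raising the frame rate shortens the maximum clip proportionally, and the joint cap is what rules out putting several detailed characters in one free export.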

When can we expect Godot support?

Eugene - We will do that after we add support for the glTF and USD formats. We are already working on this.